Compatibility between PyTorch, CUDA, and xFormers versions

Learn how to fix dependency conflicts in Artificial Intelligence software.

Introduction

To run generative models on our local machine we use software that facilitates the inference process.

For text generation models such as Falcon, Llama 2 or models from the Llama family (Vicuna or Alpaca), we usually use the Oobabooga text-generation-webui or FastChat interface.

In the case of image generation models, or rather, in the case of Stable Diffusion, the most popular interfaces are Automatic1111's Stable Diffusion web UI, InvokeAI or ComfyUI. For training or fine-tuning these models, applications/extensions such as Dreambooth or Kohya SS are used.

All of these applications have one piece in common. They use PyTorch, a machine learning library written in Python. In turn, PyTorch is a dependency for other libraries used in some of these applications (such as xFormers). And if that were not enough, to get the most performance using the GPU instead of the CPU, we will need libraries such as CUDA in the case of NVIDIA graphics cards.

All of these libraries need to understand each other and not cause problems between incompatible versions, which is difficult until we get the hang of it. In this article we will see how to install the right dependencies for all the libraries we need and solve conflicts once and for all.

When you run installation commands via pip, remember to be in a virtual environment (venv) so that the libraries are not installed globally. Of course, you also need to have Python 3.8 or higher and python-pip installed.
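Before installing anything, it can help to confirm both prerequisites from Python itself. The following is a minimal sketch: the function names are made up for this article, and it assumes an environment created with venv (or a recent virtualenv), where sys.prefix differs from sys.base_prefix.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the interpreter is running inside a virtual environment."""
    # Inside a venv, sys.prefix points at the environment, while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


def python_is_supported(min_version=(3, 8), version_info=None) -> bool:
    """Return True when the (major, minor) version is at least min_version."""
    if version_info is None:
        version_info = sys.version_info
    return tuple(version_info[:2]) >= tuple(min_version)


if __name__ == "__main__":
    print("Python >= 3.8:", python_is_supported())
    print("Inside a virtual environment:", in_virtualenv())
```

If the second line prints False, activate the environment before running any pip command.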

Choose PyTorch version

The main thing is to select the PyTorch version that we need, since this choice conditions all the other libraries. The version depends on the application we use. For example, in the case of Automatic1111's Stable Diffusion web UI, the latest version uses PyTorch 2.0.1. On the other hand, the latest version of InvokeAI requires PyTorch version 2.1.0. We can find this information in the project's requirements.txt file or in its documentation.

At the moment we will not install anything, we just note the PyTorch version that we will use. For this article we will use version 2.0.1.
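When a project does pin torch in its requirements.txt, the pin can be read with a few lines of Python. This is only a sketch: find_torch_pin is a hypothetical helper, the sample text is invented for illustration, and some projects declare their PyTorch version elsewhere (for example in a launch script), in which case this finds nothing.

```python
import re

# Matches "torch" followed directly by a version operator, so that
# "torchvision" or "torchaudio" lines are not picked up by mistake.
_TORCH_PIN = re.compile(
    r"^\s*torch\s*(==|>=|<=|~=|>|<)\s*([0-9][^\s;#,]*)", re.IGNORECASE
)


def find_torch_pin(requirements_text):
    """Return (operator, version) for the first torch requirement, or None."""
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0]  # drop trailing comments
        match = _TORCH_PIN.match(line)
        if match:
            return match.group(1), match.group(2)
    return None


# Invented sample, not taken from any real project.
SAMPLE_REQUIREMENTS = """
torchvision==0.15.2
torch==2.0.1  # pinned by the application
numpy
"""

print(find_torch_pin(SAMPLE_REQUIREMENTS))
```

For a real project, pass the contents of its requirements.txt, for example Path("requirements.txt").read_text().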

CUDA

If we are going to use our graphics card, the first thing is to install the CUDA Toolkit, the platform created by NVIDIA for general-purpose parallel computing on its GPUs. If we don't have an NVIDIA graphics card or we want to use the CPU (although it is much slower), we can skip this step. In addition, it is necessary to have the NVIDIA drivers installed.

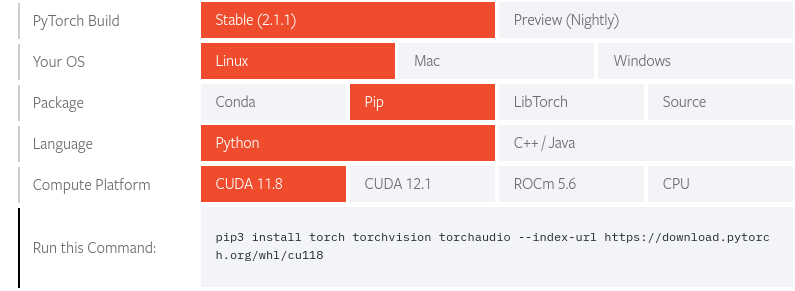

To find out which version of CUDA is compatible with a specific version of PyTorch, go to the PyTorch web page and we will find a table. If the version we need is the current stable version, we select it and look at the Compute Platform line below. Here are the CUDA versions supported by this version.

Right now the official stable version is 2.1.1 so this is not the version we want. To find other versions go to https://pytorch.org/get-started/previous-versions/.

In our case we look for the section called v2.0.1, then the Wheel subsection and inside Linux and Windows we will find this:

# ROCM 5.4.2 (Linux only)

pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2 --index-url https://download.pytorch.org/whl/rocm5.4.2

# CUDA 11.7

pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2

# CUDA 11.8

pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2 --index-url https://download.pytorch.org/whl/cu118

# CPU only

pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2 --index-url https://download.pytorch.org/whl/cpu

Here we can see that this version is compatible with CUDA 11.7 and 11.8. Let's choose CUDA 11.8. We reserve the PyTorch installation command for later; first we are going to install CUDA.

If we use Windows or Linux distributions based on Debian (Ubuntu) or Red Hat (Fedora or CentOS), we can go to the official download page, select the desired CUDA version and follow the installation instructions that will appear.

In the case of Arch Linux, we will download the .tar.* file of the version we need, through the archlinux package archive: https://archive.archlinux.org/packages/c/cuda/.

To install a specific version run the command sudo pacman -U PACKAGE.tar.xz, since pacman -S cuda will always install the latest version available.

Also, it is essential to add cuda to the IgnorePkg variable in /etc/pacman.conf file (IgnorePkg = cuda), in order to prevent this package from being updated when upgrading the system.

Therefore, since the latest version of CUDA 11.8 available on Arch Linux is cuda-11.8.0-1-x86_64.pkg.tar.zst, we download this file and run sudo pacman -U cuda-11.8.0-1-x86_64.pkg.tar.zst.

Checking

On Linux systems, many people use the nvidia-smi command to check that CUDA is installed correctly. This is not reliable: the command shows the latest CUDA version that the installed drivers support, and it will show a version even if we do not have the CUDA toolkit installed.

nvidia-smi

Mon Nov 20 21:21:13 2023
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 545.29.02    Driver Version: 545.29.02    CUDA Version: 12.3     |
|-------------------------------+----------------------+----------------------+
...

The correct method to check the installed CUDA version is to use the nvcc --version command. We will get an output like this:

nvcc --version

nvcc: NVIDIA (R) Cuda compiler driver
Copyright (c) 2005-2022 NVIDIA Corporation
Built on Wed_Sep_21_10:33:58_PDT_2022
Cuda compilation tools, release 11.8, V11.8.89
Build cuda_11.8.r11.8/compiler.31833905_0
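If we want to run this check from a script, the release number can be extracted from the nvcc output. The sketch below uses hypothetical helper names and assumes the output contains a "release X.Y" fragment, as in the example above. It returns None when nvcc is not on the PATH.

```python
import re
import shutil
import subprocess


def parse_nvcc_release(output):
    """Extract (major, minor) from the text printed by `nvcc --version`."""
    match = re.search(r"release\s+(\d+)\.(\d+)", output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def installed_toolkit_release():
    """Run nvcc if it is on the PATH and return its release, else None."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        return None
    result = subprocess.run(
        [nvcc, "--version"], capture_output=True, text=True, check=False
    )
    return parse_nvcc_release(result.stdout)


# Line taken from the nvcc output shown in this article.
SAMPLE_LINE = "Cuda compilation tools, release 11.8, V11.8.89"
print(parse_nvcc_release(SAMPLE_LINE))  # (11, 8)
```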

In some Linux distributions the nvcc --version command detects the installed CUDA version but, when starting an application that uses CUDA, we will see errors such as:

CUDA_SETUP: WARNING! libcudart.so not found in any environmental path. Searching /usr/local/cuda/lib64...

This happens because the LD_LIBRARY_PATH variable does not contain the necessary path. Look for the libcudart.so file on your system and add its directory to the variable. In the case of Arch Linux I had to export this path:

export LD_LIBRARY_PATH=/opt/cuda/targets/x86_64-linux/lib:$LD_LIBRARY_PATH
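The search for libcudart.so and the comparison against LD_LIBRARY_PATH can be sketched in Python as follows. The helper names are hypothetical, and the two search roots are assumptions: /opt/cuda is the location mentioned above for Arch Linux and /usr/local/cuda is the one named in the warning. Your distribution may use a different directory.

```python
import os
from pathlib import Path


def find_libcudart_dirs(roots):
    """Return the directories under `roots` that contain a libcudart.so* file."""
    found = []
    for root in roots:
        root = Path(root)
        if not root.is_dir():
            continue
        for path in root.rglob("libcudart.so*"):
            parent = str(path.parent)
            if parent not in found:
                found.append(parent)
    return found


def missing_from_library_path(directories, ld_library_path=None):
    """Return the directories that are not listed in LD_LIBRARY_PATH."""
    if ld_library_path is None:
        ld_library_path = os.environ.get("LD_LIBRARY_PATH", "")
    current = [
        os.path.normpath(entry)
        for entry in ld_library_path.split(os.pathsep)
        if entry
    ]
    return [d for d in directories if os.path.normpath(d) not in current]


if __name__ == "__main__":
    # Assumed search roots; adjust them to your system.
    candidates = find_libcudart_dirs(["/opt/cuda", "/usr/local/cuda"])
    for directory in missing_from_library_path(candidates):
        print(f"Not in LD_LIBRARY_PATH: {directory}")
```

Any directory it prints is a candidate to add to the variable with an export line like the one above.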

cuDNN

cuDNN is optional, but it is a library created by NVIDIA that offers improvements when working with neural networks. That is, it increases the efficiency of certain algorithms and we will get extra speed.

To access the downloads we will need a free account on NVIDIA's developer portal. You can also try to find the version you need without registration on the following page: https://developer.download.nvidia.com/compute/redist/cudnn/.

To install cuDNN on Windows or on Debian/Red Hat based Linux distributions, we go to NVIDIA's developer portal (https://developer.nvidia.com/rdp/cudnn-download) and select the latest version of cuDNN compatible with the CUDA version that we have installed. As of today, cuDNN v8.9.6 is the latest version available for the 11.x CUDA branch.

Older versions of cuDNN can be found here: https://developer.nvidia.com/rdp/cudnn-archive.

In Arch Linux we don't need to download anything from the developer portal, nor do we need an account (although it's useful to check which cuDNN version we need). We will download the package from the archlinux archive as we did with CUDA. In this case we go to https://archive.archlinux.org/packages/c/cudnn/ and look for the version we need. In our case we download cudnn-8.9.6.50-1-x86_64.pkg.tar.zst and install it with sudo pacman -U cudnn-8.9.6.50-1-x86_64.pkg.tar.zst.

If you have trouble finding compatible versions you can refer to the cuDNN Support Matrix documentation page, where you will find compatibility tables between different combinations of operating systems, drivers and CUDA/cuDNN versions.

Checking

cuDNN just copies some files to the system, so if we search the system for the cudnn.h file and find it, it will have been installed correctly. On Linux we can search for it using find / -name cudnn.h.
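Finding the header tells us cuDNN is present, but not which version. As a rough sketch, the version can be read from the macros CUDNN_MAJOR, CUDNN_MINOR and CUDNN_PATCHLEVEL. The assumption here is that in cuDNN 8 these macros are defined in a cudnn_version.h file next to cudnn.h, while older releases define them in cudnn.h itself. The function name and the sample text are illustrative only.

```python
import re


def parse_cudnn_version(header_text):
    """Return (major, minor, patch) from cuDNN header text, or None."""
    values = []
    for name in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        match = re.search(
            rf"^\s*#define\s+{name}\s+(\d+)", header_text, re.MULTILINE
        )
        if match is None:
            return None  # not a version header, or an unexpected layout
        values.append(int(match.group(1)))
    return tuple(values)


# Illustrative fragment shaped like the relevant lines of the header.
SAMPLE_HEADER = """
#define CUDNN_MAJOR 8
#define CUDNN_MINOR 9
#define CUDNN_PATCHLEVEL 6
"""

print(parse_cudnn_version(SAMPLE_HEADER))  # (8, 9, 6)
```

To use it on a real installation, read the header located with the find command and pass its contents to parse_cudnn_version.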

PyTorch

Now we are going to install the chosen version of PyTorch. On the PyTorch web page (https://pytorch.org/get-started/locally/) we will find a table whose first option is the current stable version of PyTorch. If this is the version we need, we just keep choosing options until we get the installation command to run. In the Your OS row we choose our operating system, in the Package row we choose Pip, in the Language row we choose Python and in the Compute Platform row we choose the CUDA version we previously installed (or ROCm/CPU if applicable).

Currently the stable version is 2.1.1, so that is not the version we need for this article. To find other versions we access the page https://pytorch.org/get-started/previous-versions/. In our case we look for the section called v2.0.1, then the Wheel subsection and inside Linux and Windows (OSX if we use Apple) we will find the installation command according to our computing platform:

# ROCM 5.4.2 (Linux only)

pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2 --index-url https://download.pytorch.org/whl/rocm5.4.2

# CUDA 11.7

pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2

# CUDA 11.8

pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2 --index-url https://download.pytorch.org/whl/cu118

# CPU only

pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2 --index-url https://download.pytorch.org/whl/cpu

In this article we chose to use CUDA 11.8, so we run that command.
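The four commands above differ only in the index URL, so the choice of computing platform can be expressed as a small lookup table. This is a sketch for illustration: the platform keys and the function name are made up here, and the package versions and URLs are copied from the v2.0.1 commands listed above.

```python
import shlex

# Package pins for PyTorch 2.0.1, as listed on the previous-versions page.
TORCH_2_0_1_PACKAGES = ["torch==2.0.1", "torchvision==0.15.2", "torchaudio==2.0.2"]

# Hypothetical platform keys mapped to the index URL of each command.
# None means the command uses the default package index (the CUDA 11.7 build).
INDEX_URLS = {
    "rocm5.4.2": "https://download.pytorch.org/whl/rocm5.4.2",
    "cu117": None,
    "cu118": "https://download.pytorch.org/whl/cu118",
    "cpu": "https://download.pytorch.org/whl/cpu",
}


def pip_install_args(platform):
    """Build the pip argument list for the given computing platform."""
    if platform not in INDEX_URLS:
        raise ValueError(f"Unknown platform: {platform!r}")
    args = ["pip", "install", *TORCH_2_0_1_PACKAGES]
    index_url = INDEX_URLS[platform]
    if index_url is not None:
        args += ["--index-url", index_url]
    return args


# Print the command for the platform chosen in this article.
print(shlex.join(pip_install_args("cu118")))
```

The returned list can also be passed to subprocess.run, replacing "pip" with sys.executable, "-m", "pip" so that the command targets the active virtual environment.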

If you are using Automatic1111's Stable Diffusion web UI, every time the application runs the libraries will be updated. You can prevent torch from updating by using the variable TORCH_COMMAND, using the same installation command as the value.

Example: TORCH_COMMAND="pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2 --index-url https://download.pytorch.org/whl/cu118"

Checking

To verify that PyTorch has been installed correctly, we run the following command:

python -c "import torch; print(torch.__version__)"

2.0.1+cu118

And if we want to check that PyTorch has access to the GPU via CUDA:

python -c "import torch; print(torch.cuda.is_available())"

True

xFormers

xFormers is a library developed by Meta (formerly Facebook) that provides a series of improvements and optimizations when working with Transformers. For generative models such as Stable Diffusion, using xFormers can considerably reduce memory requirements. Most of the applications mentioned in the introduction are capable of using xFormers; check each project's documentation to confirm.

The list of xformers versions can be found at https://pypi.org/project/xformers/#history.

Unfortunately I have not found any compatibility table between PyTorch versions and xFormers versions. If we do pip install xformers, it will install the latest xFormers version and also update PyTorch to the latest version, something we don't want.

Even so, it is quite easy to solve, just add xformers to the command with which we install PyTorch:

pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2 xformers --index-url https://download.pytorch.org/whl/cu118

This way we force the installation of a version of xformers that is compatible with torch==2.0.1.

If you are using Automatic1111's Stable Diffusion web UI, every time the application runs the libraries will be updated. You can prevent xformers from updating by using the variable XFORMERS_PACKAGE.

Example: XFORMERS_PACKAGE="xformers==0.0.22.post7+cu118".

You can see the version that has been installed through pip list | grep xformers.

Checking

To verify that xFormers is installed correctly, we run the following command:

python -m xformers.info

xFormers 0.0.22
memory_efficient_attention.cutlassF: available
memory_efficient_attention.cutlassB: available
memory_efficient_attention.decoderF: available
memory_efficient_attention.flshattF@v2.3.0: available
memory_efficient_attention.flshattB@v2.3.0: available
...

We will see more information than what is shown here, but the important thing is to see available in the memory efficient attention lines.

If we see the text WARNING[XFORMERS]: xFormers can't load C++/CUDA extensions., it means that it has not been installed correctly.
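As a final check, we can list the versions that ended up in the environment without importing the libraries. The sketch below relies only on the standard importlib.metadata module, and the helper names are hypothetical. It also assumes the build tag appears after a "+" in the version string, as in 2.0.1+cu118.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of `package`, or None if absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def local_build_tag(version):
    """Return the part after '+' (for example 'cu118'), or None."""
    if version and "+" in version:
        return version.split("+", 1)[1]
    return None


if __name__ == "__main__":
    for name in ("torch", "torchvision", "torchaudio", "xformers"):
        version = installed_version(name)
        tag = local_build_tag(version)
        print(f"{name}: {version or 'not installed'} (build tag: {tag or 'none'})")
```

If torch and xformers report different build tags, or torch shows a version other than the one we pinned, the installation command is worth repeating inside a clean virtual environment.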

Conclusion

In this article we have seen how to solve the most common versioning problems around these dependencies. Summarizing:

- Choose a PyTorch version according to the needs of the application we are going to use.

- Install CUDA if we want to take advantage of the performance that an NVIDIA GPU offers us. Install cuDNN to further speed up the software.

- Install PyTorch with the installation command provided by its website, choosing the appropriate computing platform.

- Install xFormers to reduce memory requirements if the application supports it.
